In this article, we look at what problems A/B testing of mobile applications solves, how to run it, and why it is an effective way to study user behavior.

- What is app AB Testing?

- Why do A/B testing?

- Choice of feature options for experiment

- Step-by-step instructions on how to prepare for AB testing

- Common mistakes in AB testing mobile apps

- How to do A/B test in Google Play

- How to do A/B test in App Store

- Conclusion

What is app AB Testing?

AB testing is a powerful tool for improving the results of your app marketing strategy. It lets you compare two versions of a single element, such as two different buttons or two distinct images, while everything else stays the same. The technique involves randomly dividing your target audience into two groups and showing each group a different variant.
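For experiments you run inside your own app (the store consoles handle the split for store listing tests themselves), the random division into groups can be sketched as follows. This is a minimal illustration, not a prescribed method: the function name `assign_variant` and the experiment name `new_icon_test` are hypothetical. Hashing the user ID keeps each user in the same group on every visit while spreading users roughly evenly across groups.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one variant with roughly equal shares."""
    # Including the experiment name gives each experiment its own split.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]

if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "new_icon_test")] += 1
    print(counts)  # the two counts should be close to each other
```

The same function works for more than two variants: pass a longer `variants` tuple.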

AB testing is a great way to improve your app marketing strategy, as you can use the data from these tests to make informed decisions about how to optimize your campaigns.

It’s important to understand how A/B tests work before diving in, because that understanding will help you decide whether they are right for your business.

Why do A/B testing?

There are 3.59 million apps on the App Store and 2.33 million apps on Google Play. Growing competition pushes developers to make interfaces clearer and functionality more extensive.

A/B testing in app marketing is important because it allows app developers and marketers to optimize their marketing strategies and make data-driven decisions. The goal of A/B testing is to compare two or more variants of a marketing campaign to determine which one is the most effective in terms of achieving desired outcomes such as increased installs, higher engagement, or improved user retention. By conducting A/B tests, app marketers can:

- identify what works best for their target audience,

- make informed decisions about their marketing strategies,

- improve the overall success of their app,

- optimize in-app engagements,

- observe the impact of a new feature,

- find the best description, title, and screenshots.

A/B testing also helps eliminate the guesswork and assumptions from app marketing, enabling marketers to focus on strategies that have been proven to work and avoid wasting resources on strategies that don’t produce the desired results.

Choice of feature options for experiment

In a mobile application, you can test:

- different keyword combinations and search queries,

- the same keyword in different search queries,

- different keywords that are similar enough to share the same intent and audience,

- buttons (CTAs),

- visuals: images, icons, promo videos.

It is important to test only one element at a time, presented in several versions (not necessarily two). If you change more than one element at once, you will not be able to tell which change attracted users. You can start, for instance, by testing different keyword combinations and search queries.

For example, if you sell shoes, you might want to use different versions of “shoes,” such as “women’s shoes” or “men’s shoes.” This will help you get more traffic from people who are looking for specific types of shoes.

Step-by-step instructions on how to prepare for AB testing

Preparation is almost half the work in A/B testing. Properly conducted tests will help identify the problem, and the more accurately you pin it down, the less money and time you will spend on fixing it. Below are the main steps for preparing and conducting A/B tests of mobile games and applications:

- Hypothesis preparation. Why is the app currently showing low conversion rates? The list of possible problems is the basis for the hypotheses you will test. The data can come from your own opinion, the opinion of the team, or information from users (reviews will serve you well here). As a result of testing, you will have a clear understanding of which changes cause users to react positively and which cause them to react negatively.

- Define the elements you will be testing. This may be a new icon, screenshots, description, or promo video on the application page. Design changes to the elements should be noticeable enough to users. Also consider the traffic to your application page: with low traffic, the accuracy of the tests will not be high.

- Proper segmentation of users. The groups must be similar in size, gender mix, age, and mobile device version. No segment of users should strongly dominate in one version of the experiment but not in the other. For example, the ratio of men to women and the share of users with the newest iPhone should be approximately the same in each group. If the groups differ strongly, the test results may be completely incorrect. Keep in mind, too, that a small audience in A/B testing can lead to inaccurate conclusions and hinder the optimization of your app.

- Determine the duration of testing. The duration of the tests depends on the amount of traffic your application receives.
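To make the link between traffic and duration concrete, here is a rough sketch that estimates how many visitors each group needs and how many days that takes. It uses the standard sample-size formula for comparing two proportions; the function names, the 5% significance level, the 80% power, and the traffic figures are assumptions for illustration, not values from this article.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_group(baseline, relative_lift, alpha=0.05, power=0.8):
    """Visitors needed per group to detect a relative lift in conversion rate."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)

def days_needed(n_per_group, groups, daily_visitors):
    """Days of traffic required to fill every group."""
    return ceil(n_per_group * groups / daily_visitors)

if __name__ == "__main__":
    # Hypothetical inputs: 16% baseline conversion, hoping to detect a 12.5% lift.
    n = sample_size_per_group(baseline=0.16, relative_lift=0.125)
    print(n, "visitors per group")
    print(days_needed(n, groups=2, daily_visitors=1000), "days at 1,000 visitors/day")
```

The smaller the lift you want to detect, the more visitors you need, which is why low-traffic pages call for longer tests or bolder changes.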

Common mistakes in AB testing mobile apps

Let’s list the main mistakes in A/B testing from our practice.

1. Ignoring external factors. User behavior often changes depending on external factors. A classic example of such factors is seasonality:

- Food delivery and taxi apps are busier in bad weather;

- Dating apps are more active on Friday and Saturday;

- E-commerce is booming during the holidays.

Accordingly, it makes sense to compare samples that cover at least one full seasonal cycle, so that both variants see the same conditions. It is also worth choosing periods in which your main audience is well represented.

2. Stopping tests too early. Complete your A/B tests even if early results are inconclusive. To ensure accuracy and build a high level of confidence in your findings, run the tests for a sufficient period of time. Cutting tests short may produce misleading outcomes and hinder the progress of your marketing strategies.

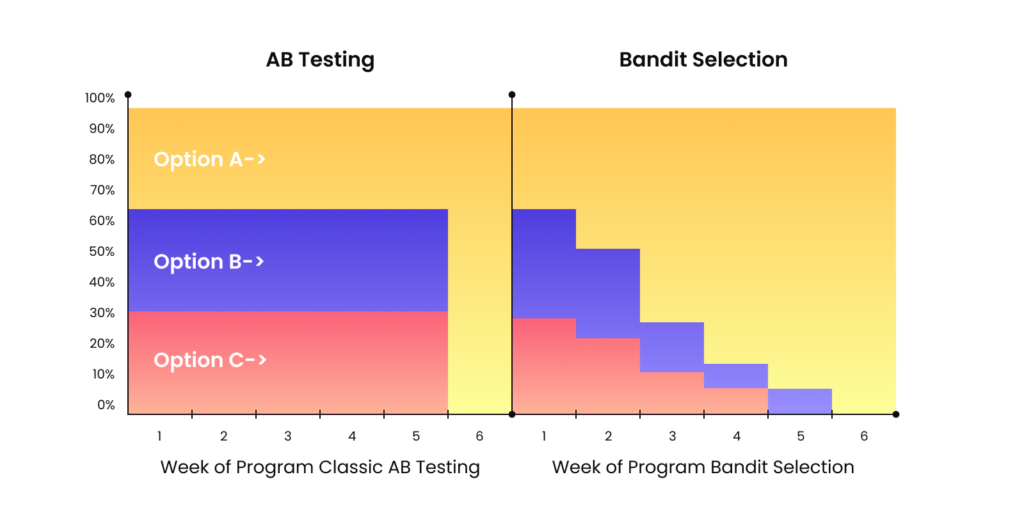

3. Relying only on the standard A/B test. In classic A/B tests, users are distributed equally across the groups. Unless you’re a tech giant with a lot of data, every A/B test comes with testing costs: throughout the experiment, you are forced to show some users the economically weaker option. However, there are algorithms that change the traffic split between options during the experiment. One of them is Thompson sampling, which applies Bayesian statistics to the multi-armed bandit problem. At each step of the experiment, the algorithm recalculates the probability of winning for each option and sends more traffic to the options that are currently more likely to win.

As we can see in the picture, if option A is winning, we increase the proportion of users who get it the next day. If on the third day it is winning again, we increase its share further. Eventually, the winning option is rolled out to 100% of users. The Bayesian bandit adapts over time as the data changes, which saves time and money.

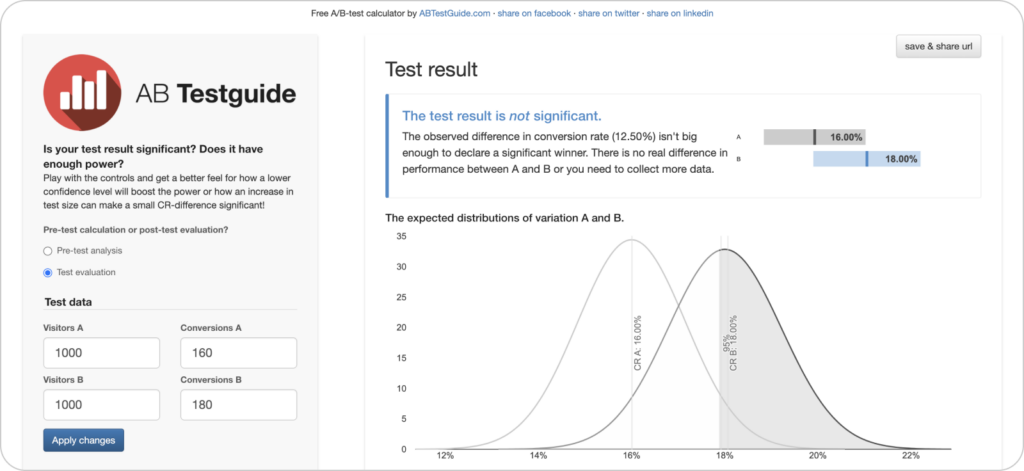

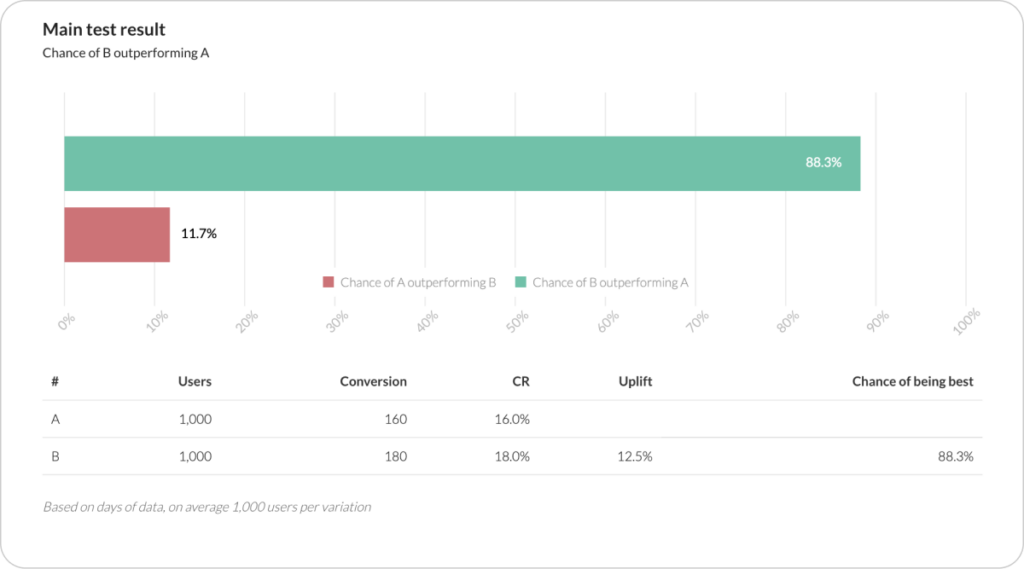
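The bandit idea can be sketched in a few lines. This is a simplified simulation under stated assumptions, not a production implementation: it updates after every visitor rather than once a day, starts from a uniform Beta(1, 1) prior, and the true conversion rates passed to `simulate` are made-up numbers. The helper names `thompson_pick` and `simulate` are hypothetical.

```python
import random

def thompson_pick(stats, rng):
    """Pick a variant by sampling each one's Beta posterior and taking the best draw.

    stats maps variant -> [conversions, non_conversions].
    """
    draws = {v: rng.betavariate(s + 1, f + 1) for v, (s, f) in stats.items()}
    return max(draws, key=draws.get)

def simulate(true_rates, visitors, seed=0):
    """Route simulated visitors with Thompson sampling and return the final counts."""
    rng = random.Random(seed)
    stats = {v: [0, 0] for v in true_rates}
    for _ in range(visitors):
        variant = thompson_pick(stats, rng)
        converted = rng.random() < true_rates[variant]
        stats[variant][0 if converted else 1] += 1
    return stats

if __name__ == "__main__":
    result = simulate({"A": 0.05, "B": 0.15}, visitors=5000, seed=1)
    for variant, (conv, no_conv) in result.items():
        print(variant, "shown to", conv + no_conv, "visitors,", conv, "conversions")
```

In a run like this, most of the traffic drifts toward the variant with the higher true rate, which is the cost saving described above.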

4. Not using statistical significance tools. Using them helps make your tests cheaper. For example, suppose the test produced two groups: group A had 160 conversions and group B had 180. Since the difference between the options is small, it is not clear how effective the changes really are.

Statistical significance comes to the rescue: it indicates whether the observed difference is likely to reflect a real effect of the change or could be explained by random variation. Special calculators will help with the calculations.
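What such a calculator does in the frequentist case can be approximated with a two-proportion z-test. The sketch below uses only the Python standard library. The article gives only the conversion counts (160 and 180), so the group size of 1,000 visitors each is an assumption made purely for illustration.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

if __name__ == "__main__":
    # 1,000 visitors per group is a hypothetical figure, not from the article.
    z, p = two_proportion_z_test(160, 1000, 180, 1000)
    print(f"z = {z:.2f}, p-value = {p:.3f}")
```

A p-value above the threshold you chose in advance (commonly 0.05) means the test has not yet shown a significant difference, and more data is needed.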

These calculators use one of two approaches: classical frequentist or Bayesian (I use abtestguide.com). The first tells you whether the difference between the tested options is statistically significant. The second tells you, in percentage terms, how likely one option is to be better than the others.

The Bayesian calculator gives a clear answer: there is an 88% probability that Option B is more successful than Option A. With a Bayesian approach, we save time and money.

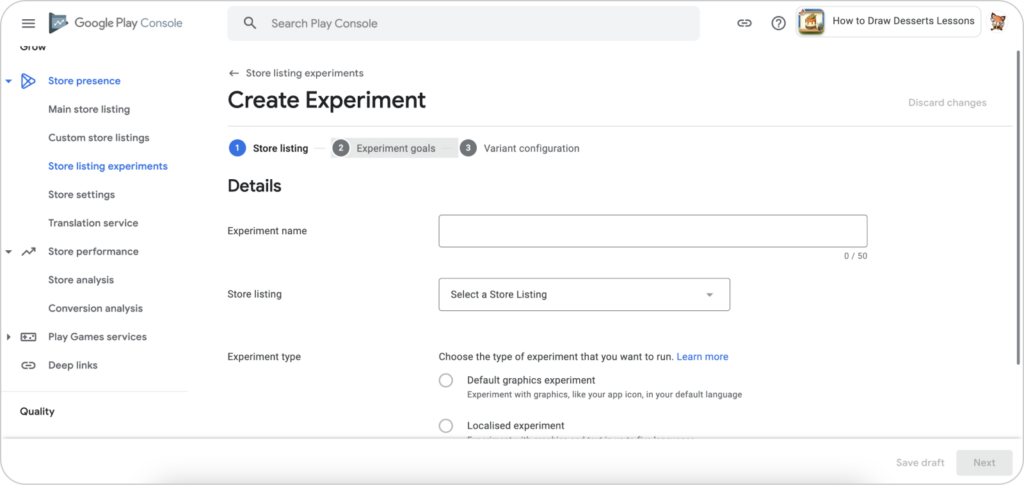
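The Bayesian figure can be approximated by simulation: model each group's conversion rate with a Beta distribution and count how often a draw for B exceeds a draw for A. This is a sketch under assumptions, not the calculator's actual method: the uniform Beta(1, 1) prior and the 1,000 visitors per group are hypothetical, since the article states only the conversion counts.

```python
import random

def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=100_000, seed=0):
    """Monte Carlo estimate of P(rate_B > rate_A) under Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_a = rng.betavariate(conv_a + 1, n_a - conv_a + 1)
        rate_b = rng.betavariate(conv_b + 1, n_b - conv_b + 1)
        wins += rate_b > rate_a
    return wins / draws

if __name__ == "__main__":
    # Group sizes of 1,000 are assumed for illustration only.
    print(prob_b_beats_a(160, 1000, 180, 1000))
```

The result reads as "the probability that B is the better option", which is often easier to act on than a p-value.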

How to do A/B test in Google Play

Log in to the Google Play Console and open Store Listing Experiments.

Tests for Android are convenient to run in the console itself: you choose the element to test from the proposed list. Note that you cannot test the app name in Google Play. You can compare up to three variants with the original version. You can run only one default store listing experiment per app at a time, or up to five experiments at once if you’ve added localized graphics assets in specific languages. The total number of experiments you can run over time is unlimited.

The Play Console’s help section contains more information on AB testing.

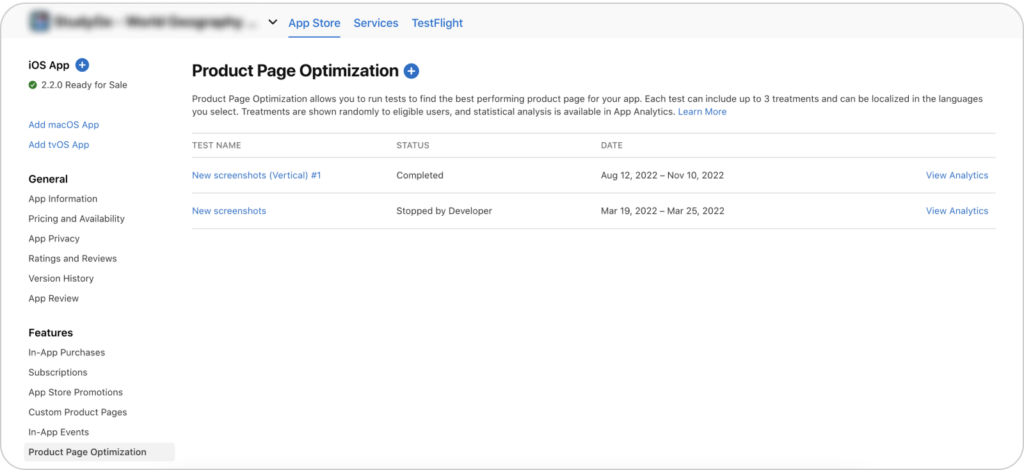

How to do A/B test in App Store

Log in to App Store Connect and open Product Page Optimization.

Each test is limited to three alternative product pages. You can choose which localizations to include; by default, all localizations are selected. A test runs for up to 90 days, but you can stop it manually earlier. Note that the metadata for the product page test must be reviewed by the store before the test starts.

Conclusion

You can’t always know what will work for your app, but A/B testing is a great way to find out. It’s an important part of any app marketing strategy, and it can help you get more downloads and keep users coming back. In ASO, the ultimate goal of A/B testing is to improve conversion rate and app visibility.

The A/B testing process can be divided into six steps, most of which involve preparation.

- Hypothesis preparation

- Define the elements you will be testing

- Proper segmentation of users

- Determine the duration of testing

- Run tests and collect data

- Analysis of results

The developer consoles in both stores provide everything you need for testing; the main thing is to follow the basic rules and avoid mistakes. If at any point you doubt the need for experimentation, look at your indexing and conversion rates. If users can easily find your application for various keywords and you are at the top of the search results, but installs do not follow, then this guide is for you.