As an app marketer, you’re likely always looking for ways to drive more downloads. One of the most effective tools for this is A/B testing. Applied strategically to ASO (App Store Optimization), this marketing staple can meaningfully raise your conversion rate, turning more store impressions into downloads. In this guide, we’ll break down what A/B testing for ASO entails, how to set up your first tests, and common pitfalls to avoid.

What Is A/B Testing in ASO?

A/B testing in ASO involves comparing two or more variations of a specific element on your app’s store page – such as alternate versions of screenshots – to determine which version resonates most with visitors. You control the percentage of store traffic exposed to each variant, but you can’t select the specific user profiles that participate.

By comparing the results, you can identify which version is most likely to drive app installs and optimize your page accordingly.
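To make "comparing the results" concrete, here is a minimal Python sketch that computes the conversion rate of two variants and runs a standard two-proportion z-test on the difference. The function names and the sample counts are illustrative assumptions, not output from any store console. Both stores report their own confidence figures, so treat this only as a sanity check on exported visitor and install counts.

```python
import math


def conversion_rate(installs: int, visitors: int) -> float:
    """Share of store page visitors who went on to install the app."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return installs / visitors


def two_proportion_z_test(installs_a, visitors_a, installs_b, visitors_b):
    """Return (z, two-sided p-value) for variant B versus control A."""
    rate_a = conversion_rate(installs_a, visitors_a)
    rate_b = conversion_rate(installs_b, visitors_b)
    # Pooled rate under the assumption that both pages convert equally well.
    pooled = (installs_a + installs_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: control A versus a new screenshot set B.
z, p = two_proportion_z_test(300, 10_000, 360, 10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A positive `z` means the variant converted better than the control, and a small p-value suggests the gap is unlikely to be random noise. The threshold you act on (0.05 is a common convention) remains your call.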

The Store Traffic Eligible for A/B Tests Includes:

- Visitors from the Browse/Explore tabs.

- Users who find your app via keyword searches.

- Any other traffic that lands on your app page.

Why Is A/B Testing Important for ASO?

A/B testing empowers you to make informed, data-driven decisions about your ASO strategy. Beyond optimizing your conversion rates, it allows you to better understand user behavior and expectations.

If a variant underperforms compared to the original, that too offers valuable insights about what doesn’t work – a takeaway that can shape your broader marketing efforts.

Preparing for Your First A/B Test

Launching your first A/B test may seem daunting, but success hinges on preparation. A well-defined hypothesis is critical before you begin.

Four Elements of a Strong Hypothesis:

- Define the Problem: Identify an issue on your current app page, whether surfaced by data analysis or user feedback. If you already know the solution, you can skip the test and make the change directly.

- Pinpoint the Element to Test: Determine which component – such as a screenshot or description – could be contributing to the problem.

- Design the Change: Clearly specify the modification you plan to make. For example, you might test removing or enhancing a particular element.

- Evaluate Visibility: Ensure the change is noticeable enough to influence user behavior. If the tested element isn’t viewed by at least 5% of visitors, it’s unlikely to yield meaningful insights.

Stick to testing one variable at a time. Changing multiple elements simultaneously makes it difficult to pinpoint which change caused the results.
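If you keep a backlog of test ideas, the four elements above can be captured in a small checklist. The sketch below is one hypothetical way to do that in Python. The `Hypothesis` class, its field names, and the example values are assumptions for illustration, and the visibility share is something you would have to estimate yourself.

```python
from dataclasses import dataclass

MIN_VISIBILITY = 0.05  # the 5% visibility threshold described above


@dataclass
class Hypothesis:
    problem: str       # the issue observed on the current page
    element: str       # the single element being tested
    change: str        # the modification applied in the variant
    visibility: float  # estimated share of visitors who see the element (0 to 1)

    def is_testable(self) -> bool:
        """True when every field is filled in and the element is visible enough."""
        filled = all(text.strip() for text in (self.problem, self.element, self.change))
        return filled and self.visibility >= MIN_VISIBILITY


# Hypothetical example: the first screenshot is seen by most visitors.
idea = Hypothesis(
    problem="Low conversion on search traffic",
    element="first screenshot",
    change="add a caption that states the main benefit",
    visibility=0.8,
)
print(idea.is_testable())  # True
```

Keeping `element` as a single string is a deliberate nudge toward testing one variable at a time.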

Publishing Your First A/B Test on App Stores

Once your hypothesis is ready, follow these steps:

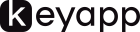

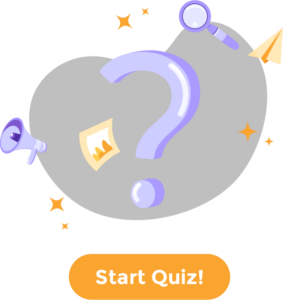

- Navigate to the A/B testing section in your store console (“Store Listing Experiments” on Google Play or “Product Page Optimization” for the App Store).

- Click “Create a Test” and follow the prompts.

Key Parameters to Set:

- Traffic Proportion: We recommend splitting traffic equally between the control and the variants, so each version collects a comparable number of visitors and results become reliable sooner.

- Test Duration: Set an estimated duration for your test to gauge whether expectations align with outcomes. Tests will not automatically end when this duration is reached.

- Test Elements: Focus on one component at a time for precise insights.
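To choose a realistic estimated duration, it helps to work out roughly how many visitors each version needs. The sketch below uses the textbook sample-size formula for comparing two proportions and assumes an equal traffic split. The function names, the default significance level (0.05), the default power (0.8), and the example traffic figures are all assumptions. Neither store necessarily calculates durations this way.

```python
import math
from statistics import NormalDist


def visitors_needed_per_arm(baseline_rate, min_relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors per version needed to detect the given relative lift."""
    if not 0 < baseline_rate < 1 or min_relative_lift <= 0:
        raise ValueError("baseline_rate must be in (0, 1) and the lift positive")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def estimated_days(daily_visitors, arms, per_arm_needed):
    """Days required when traffic is split equally across all versions."""
    per_arm_daily = daily_visitors / arms
    return math.ceil(per_arm_needed / per_arm_daily)


# Hypothetical page: 3% baseline conversion, hoping to detect a 10% relative lift,
# 4,000 daily visitors shared between the control and one variant.
needed = visitors_needed_per_arm(0.03, 0.10)
print(needed, estimated_days(4_000, arms=2, per_arm_needed=needed))
```

Smaller expected lifts or more variants push the duration up quickly, which is one more reason to test changes that are noticeable.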

Both Google Play and the App Store offer robust A/B testing tools, with slight variations.

Store Listing Experiments on Google Play

The Google Play Experiments engine is a popular choice for live A/B testing with store traffic. It supports testing:

- Creative elements (icons, promotional videos, feature graphics, screenshots)

- Short and long descriptions (but not the title)

You can test up to three variants alongside the original version. Each app can run one experiment at a time on its default assets, or up to five at once when the experiments use localized assets. These experiments can run indefinitely.

Benefits of Google Play A/B Testing:

- Identify impactful page elements.

- Understand market preferences based on language and region.

- Boost conversion rates with data-driven insights.

- Track seasonal trends for ongoing optimization.

Product Page Optimization on the App Store

Apple allows testing creative assets (icons, preview videos, and screenshots) for up to 90 days. You can test up to three variants against the original version and run localized tests across different languages.

Tips for Effective A/B Testing

Before starting, define your priorities. Should you focus on updating screenshots or adding a preview video? Identify which elements are most likely to enhance your app’s appeal.

Common A/B Testing Mistakes to Avoid

1. Ending Tests Too Soon

Ending a test prematurely can lead to inconclusive results. Store visitor behavior often varies between weekdays and weekends, so run each test for at least seven days, and ideally a multiple of seven, to capture full weekly cycles.
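The seven-day rule is easy to apply mechanically: take whatever duration you estimated and round it up to whole weeks. A minimal Python sketch, with a hypothetical helper name:

```python
import math


def round_up_to_full_weeks(days: int) -> int:
    """Round a planned duration up to the next multiple of seven days."""
    if days <= 0:
        raise ValueError("days must be positive")
    return math.ceil(days / 7) * 7


print(round_up_to_full_weeks(3))   # 7
print(round_up_to_full_weeks(27))  # 28
```

Rounding up rather than down means every weekday and weekend day is represented the same number of times in each variant's data.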

2. Assuming Identical Results Across Stores

Page design and user behavior differ between Google Play and the App Store. Don’t assume findings from one platform will automatically apply to the other.

3. Overlooking External Marketing Influences

Paid campaigns and other marketing activities can skew test results. These visitors may respond differently than organic users, distorting your conclusions.

By avoiding common mistakes and leveraging A/B testing effectively, you can unlock data-driven insights that fuel growth and drive more installs. Happy testing!